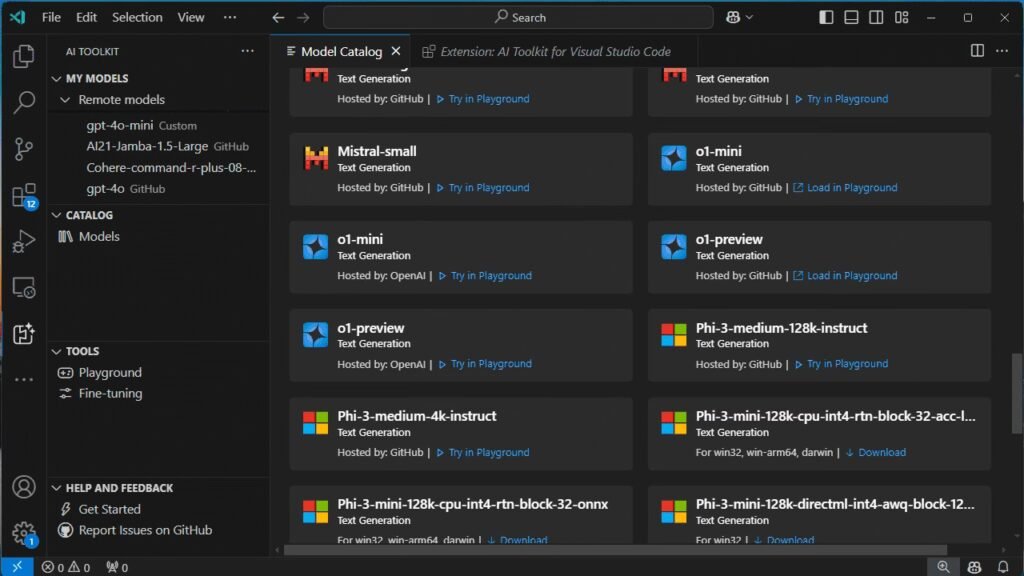

The October 2024 update to the AI Toolkit in Visual Studio Code is a substantial release for developers, researchers, and AI enthusiasts. It broadens the set of generative AI models you can use from inside the editor and gives you more control over where those models run, whether in the cloud or on your own machine. The headline addition: support for Ollama models.

Unlocking Multi-Model Power in VS Code

This update introduces multi-model support, so developers can work with a variety of AI models side by side. Here’s what’s new:

- GitHub Models: Now in public preview, GitHub Models gives developers access to top AI models directly within their favorite tools.

- ONNX Optimized Models: Lightweight, high-performance models like the Phi family, optimized for local CPU or GPU environments, are now available.

- Anthropic Claude & Google Gemini: Connect to these hosted models by supplying your own API keys.

- Ollama Models: For developers who prioritize local model hosting, the toolkit now supports the Ollama runtime.

- Custom Models: Bring your own OpenAI-compatible models by specifying custom endpoints within the AI Toolkit playground.
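
To make the "OpenAI-compatible" requirement in the last item concrete, here is a minimal Python sketch of the kind of request such an endpoint accepts. It is not the AI Toolkit's own code. The function names, the base URL, and the model name are hypothetical placeholders, and the sketch assumes the server follows the usual chat completions convention (a `/chat/completions` path under the base URL and an optional bearer token).

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build a POST request for an OpenAI-compatible chat completions endpoint.

    base_url is assumed to already include the API version segment,
    for example "http://localhost:8000/v1" (a placeholder address).
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Many compatible servers expect a bearer token; local ones often do not.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the text of the first returned choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

If a server answers this kind of request correctly, its base URL is the sort of custom endpoint the playground item above refers to.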

Why Ollama Support is a Big Deal

Ollama support in VS Code matters most to developers who prefer running AI models locally, especially those who prioritize privacy, efficiency, or offline use. Whether you’re debugging, generating code, or evaluating outputs, models served through Ollama run on your own machine, so your prompts and results never have to pass through external servers.
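
As an illustration of what "local" means in practice, the sketch below talks to an Ollama server directly over its native chat API, outside of the AI Toolkit. It assumes Ollama is running at its default address (`http://localhost:11434`) and that the model you name has already been pulled. The helper names are hypothetical.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (an assumption about your setup).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_ollama_payload(model, prompt):
    """Build the JSON body for a single, non-streaming chat turn."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON reply instead of chunks
    }


def extract_reply(response_body):
    """Pull the assistant's text out of a non-streaming chat response."""
    return response_body["message"]["content"]


def chat_locally(model, prompt, url=OLLAMA_CHAT_URL, timeout=120):
    """Send one prompt to the local server and return the model's reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(build_ollama_payload(model, prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

Because the request only ever goes to `localhost`, the prompt and the reply stay on the machine, which is the privacy and offline benefit described above.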

How Multi-Model Use Works

Users can now combine multiple models in a single session to optimize workflows. For example, one model could handle code generation while another reviews the output for quality. This lets developers use each model for the task it suits best, all within one interface.
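
The generate-then-review pattern described above can be sketched in a few lines of Python. This is only an illustration of the workflow, not the AI Toolkit's API: each "model" is assumed to be any function that takes a prompt string and returns a reply string, and the names and prompt wording are hypothetical.

```python
from typing import Callable, Dict

# A "model" here is any callable that maps a prompt to a text reply,
# for example a thin wrapper around a local or hosted chat endpoint.
Model = Callable[[str], str]


def generate_then_review(task: str, generator: Model, reviewer: Model) -> Dict[str, str]:
    """Ask one model to write code, then ask a second model to review it."""
    draft = generator(f"Write code for the following task:\n{task}")
    review = reviewer(f"Review this code for bugs and style issues:\n{draft}")
    return {"draft": draft, "review": review}


if __name__ == "__main__":
    # Stand-in models so the sketch runs without any server.
    def fake_generator(prompt: str) -> str:
        return "def add(a, b):\n    return a + b"

    def fake_reviewer(prompt: str) -> str:
        return "Looks fine; consider adding type hints."

    result = generate_then_review("Add two numbers", fake_generator, fake_reviewer)
    print(result["draft"])
    print(result["review"])
```

Keeping the models behind plain callables means the generator and the reviewer can be swapped independently, for instance a local model for drafting and a hosted one for review.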

A New Era of AI Development

The AI Toolkit in Visual Studio Code takes a significant step forward with this update. Whether you’re experimenting with the newest hosted models or looking for efficient, locally hosted options like Ollama, this release makes for a more productive and flexible development experience.

To explore these features, update your AI Toolkit and start building with Ollama and other leading AI models directly in Visual Studio Code.