Qwen QwQ (QwQ-32B-Preview) is an experimental research model from the Qwen Team, designed to explore and advance AI reasoning capabilities. While it shows promise, it is still in the research phase and has several notable limitations.

```shell
ollama run qwq
```

Key Challenges

- Language Mixing & Code-Switching: The model may unpredictably blend or switch between languages, which can impact the clarity of its responses.

- Recursive Reasoning Loops: It sometimes falls into circular reasoning, generating lengthy answers that lack definitive conclusions.

- Safety Concerns: Enhanced safety protocols are needed to ensure secure and ethical use. Users are advised to approach deployment cautiously.

- Performance Gaps: While the model excels at mathematics and coding tasks, it has room for improvement in other areas, particularly common-sense reasoning and nuanced language understanding.
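When QwQ is served locally with Ollama, one way to keep a runaway reasoning loop from running indefinitely is to cap the number of generated tokens. The sketch below assumes Ollama's local REST endpoint (`POST /api/generate` on the default port 11434) and its `num_predict` option. The helper names `build_request` and `generate` and the 4,096-token default are hypothetical, illustrative choices, not settings recommended by the Qwen Team.

```python
import json
import urllib.request

# Ollama's default local REST endpoint for single-prompt generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, max_tokens: int = 4096) -> dict:
    """Build a non-streaming /api/generate payload with a hard cap on output length.

    max_tokens (a hypothetical default of 4096) is passed as Ollama's num_predict
    option, which stops generation after that many tokens even if the model is
    still reasoning.
    """
    return {
        "model": "qwq",
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a token stream
        "options": {"num_predict": max_tokens},
    }


def generate(prompt: str, max_tokens: int = 4096) -> str:
    """POST the payload to a running Ollama server and return the generated text."""
    body = json.dumps(build_request(prompt, max_tokens)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # Non-streaming responses carry the full completion in the "response" field.
        return json.loads(resp.read())["response"]
```

With an Ollama server running and the model pulled (for example via `ollama run qwq`), calling `generate("How many positive divisors does 360 have?", max_tokens=2048)` returns the model's text. If the cap is reached, the answer may stop mid-reasoning, so the limit trades completeness for bounded latency.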

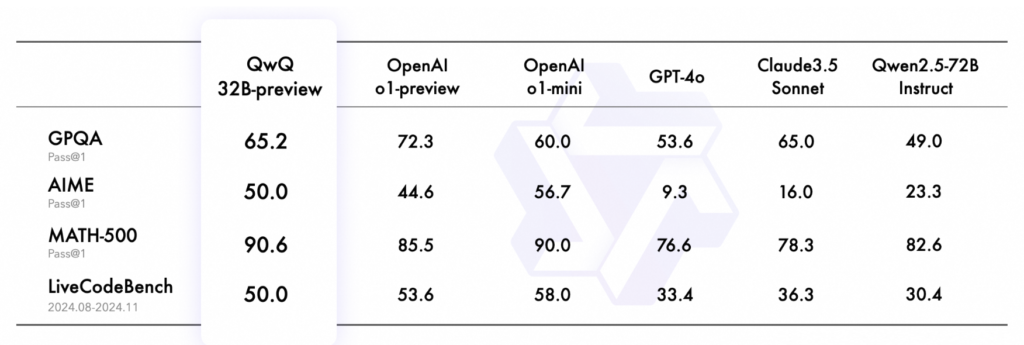

QwQ demonstrates remarkable performance across these benchmarks:

- 65.2% on GPQA, showcasing its graduate-level scientific reasoning capabilities

- 50.0% on AIME, highlighting its strong mathematical problem-solving skills

- 90.6% on MATH-500, demonstrating exceptional mathematical comprehension across diverse topics

- 50.0% on LiveCodeBench, validating its robust programming abilities in real-world scenarios

Specifications

- Type: Causal Language Model

- Development Stage: Pretraining & Post-training

- Architecture: Transformers with RoPE, SwiGLU, RMSNorm, and Attention QKV bias

- Parameter Count:

  - Total: 32.5B

  - Non-Embedding: 31.0B

- Structure:

  - 64 Layers

  - 40 Attention Heads for Q and 8 for KV (GQA)

- Context Length: Full 32,768 tokens
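The layer count, GQA head counts, and context length listed above determine how much memory the key/value cache needs. The following back-of-the-envelope sketch assumes a per-head dimension of 128 (a hidden size of 5,120 divided by 40 query heads; this page does not state either figure) and 16-bit cache entries. The constant and function names are illustrative.

```python
"""Rough KV-cache sizing for QwQ-32B-Preview under grouped-query attention (GQA).

Layer/head counts and context length come from the specification list;
HEAD_DIM is an ASSUMPTION, not a figure from the specification.
"""

NUM_LAYERS = 64          # spec: 64 layers
NUM_Q_HEADS = 40         # spec: 40 attention heads for Q
NUM_KV_HEADS = 8         # spec: 8 heads for KV (GQA)
CONTEXT_LENGTH = 32_768  # spec: full context length in tokens
HEAD_DIM = 128           # ASSUMPTION: per-head dimension (5,120 hidden / 40 heads)


def kv_head_for_query_head(q_head: int) -> int:
    """Return the KV head shared by a given query head under GQA."""
    group_size = NUM_Q_HEADS // NUM_KV_HEADS  # 5 query heads share each KV head
    return q_head // group_size


def kv_cache_bytes(seq_len: int, kv_heads: int = NUM_KV_HEADS, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for seq_len tokens (2 bytes = fp16/bf16)."""
    # Leading factor 2: every layer stores one key tensor and one value tensor.
    return 2 * NUM_LAYERS * kv_heads * HEAD_DIM * seq_len * bytes_per_elem


if __name__ == "__main__":
    gib = 1024 ** 3
    print("query heads per KV head:", NUM_Q_HEADS // NUM_KV_HEADS)
    print("KV head used by query head 17:", kv_head_for_query_head(17))
    print(f"GQA cache at full context: {kv_cache_bytes(CONTEXT_LENGTH) / gib:.1f} GiB")
    print(f"Same cache with 40 KV heads: {kv_cache_bytes(CONTEXT_LENGTH, NUM_Q_HEADS) / gib:.1f} GiB")
```

Under these assumptions, one full-length 32,768-token sequence needs 256 KiB of cache per token, or 8 GiB in total. Without GQA, giving every query head its own key/value head would raise that to 40 GiB. Real usage also depends on the runtime and on any cache quantization it applies.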

While QwQ-32B-Preview excels in analytical domains like math and coding, ongoing research aims to address its limitations and refine its broader reasoning abilities. This preview represents a promising step in the evolution of advanced AI models.

For more details, please refer to our blog. You can also check the Qwen2.5 GitHub repository and documentation.