In the world of AI and large language models (LLMs), Ollama has emerged as a user-friendly tool for running these powerful models locally on your computer. Recently, during a visit to the LangChain offices, I came across Ollama, thanks to a cute sticker that piqued my interest. Upon asking, I learned that Ollama allows users, especially those who aren’t tech-savvy, to easily install and run large language models on their devices. This intrigued me as someone who typically relies on cloud-based models rather than local ones. After spending around ten days with Ollama, I found it to be a valuable tool worth exploring.

Running Large Language Models Locally with Ollama

Ollama is designed to simplify running large language models on macOS and Linux. Windows support is expected soon; for now, the focus is on making it easy to download and run models such as LLaMA-3, CodeLLaMA, Falcon, and Mistral, along with fine-tuned variants such as Vicuna and WizardCoder. (The full list of supported models is on the Model Library page.) The tool offers a straightforward way to set up these models without extensive technical know-how.

The installation process is simple. After downloading Ollama from the official website, you can easily install it on your machine.

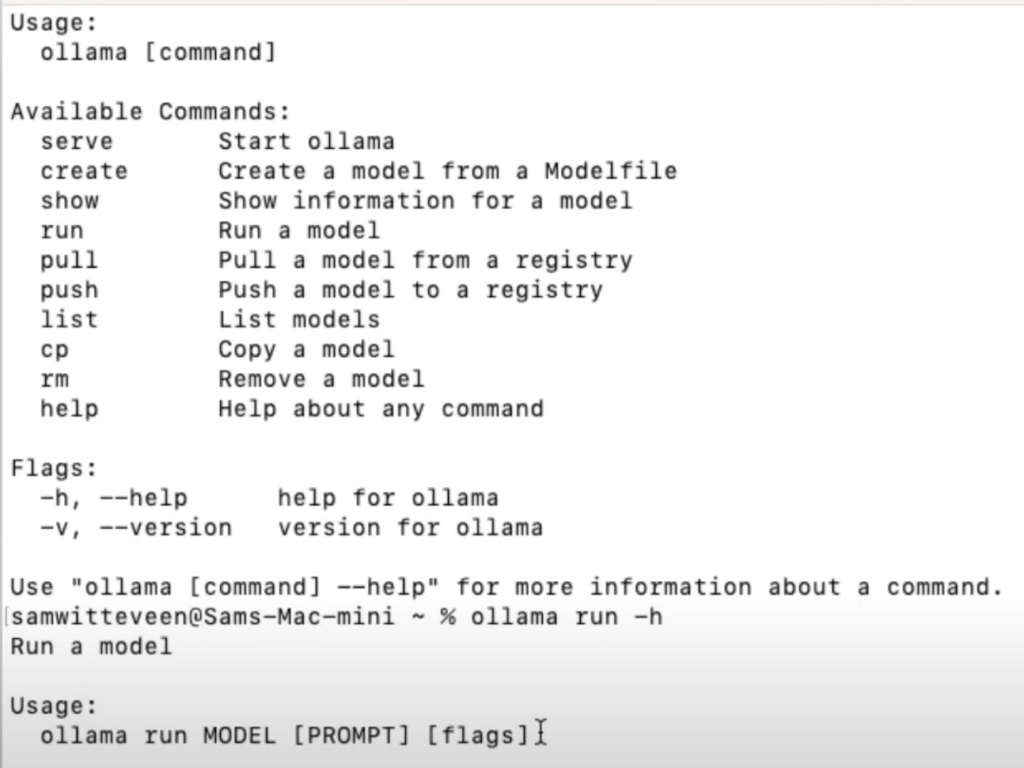

Once installed, Ollama serves models through a local API and lets you run them directly from your command line. This is particularly useful for those unfamiliar with model deployment, as it requires minimal setup to get started.
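To make the API side concrete, here is a minimal sketch of calling a locally running Ollama server from Python using only the standard library. It assumes the server is listening on its default address (`http://localhost:11434`) and that a model tagged `llama2` has already been downloaded; the function names and the sample prompt are illustrative, not something from the original walkthrough.

```python
import json
import urllib.request

# Assumption: Ollama's local server is running on its default address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request and return the generated text.

    Requires a running Ollama server with `model` already downloaded.
    """
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


# Building the request needs no server, so it is safe to inspect offline.
# "llama2" and the prompt are placeholder values.
request = build_generate_request("llama2", "Why is the sky blue?")
print(request.full_url)
```

With the server running, `generate("llama2", "Why is the sky blue?")` should return the model's answer as a string.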

Customizing and Running Models with Ollama

One of the standout features of Ollama is the ability to customize models with specific prompts. For instance, you can create a custom model file (Ollama calls it a Modelfile) that names a base model and sets parameters such as temperature along with a system prompt.

In one example, I created a custom prompt called “Hogwarts” that transformed the LLaMA-2 model into a version of Professor Dumbledore, answering questions related to Hogwarts and wizardry. This level of customization allows you to tailor the models to specific needs or creative projects.
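A custom model like this can be sketched as follows. The snippet renders the text of a Modelfile using the `FROM`, `PARAMETER`, and `SYSTEM` instructions; the `llama2` tag, the temperature value, and the wording of the system prompt are assumptions for illustration, not the exact file used in the example above.

```python
def render_modelfile(base_model: str, temperature: float, system_prompt: str) -> str:
    """Return Modelfile text with a base model, one parameter, and a system prompt."""
    return (
        f"FROM {base_model}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """\n{system_prompt}\n"""\n'
    )


# Hypothetical values: the real prompt and temperature may differ.
modelfile_text = render_modelfile(
    base_model="llama2",
    temperature=1.0,
    system_prompt=(
        "You are Professor Dumbledore. Answer as Dumbledore and only give "
        "guidance about Hogwarts and wizardry."
    ),
)
print(modelfile_text)
```

Saving the printed text to a file named `Modelfile` and running `ollama create hogwarts -f ./Modelfile` followed by `ollama run hogwarts` should then start a chat with the customized model.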

Running models with Ollama is intuitive. You can easily download models, check their status, and even track performance metrics like tokens per second. If you need to remove a model or switch between different ones, the command line interface makes it a breeze.
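As a rough illustration of these day-to-day tasks, the sketch below wraps the `ollama` command line tool and computes tokens per second from the timing fields in a non-streaming API response. It assumes `ollama` is on your `PATH`, and that `eval_count` holds the number of generated tokens while `eval_duration` holds the generation time in nanoseconds; the helper names and sample numbers are made up for the example.

```python
import subprocess


def run_ollama(*args: str) -> str:
    """Run an `ollama` CLI subcommand and return its standard output.

    Requires Ollama to be installed; raises CalledProcessError on failure.
    """
    result = subprocess.run(
        ["ollama", *args], capture_output=True, text=True, check=True
    )
    return result.stdout


def tokens_per_second(response: dict) -> float:
    """Compute generation speed from a non-streaming generate response.

    Assumes `eval_count` is a token count and `eval_duration` is in nanoseconds.
    """
    seconds = response["eval_duration"] / 1e9
    return response["eval_count"] / seconds


# Typical CLI calls (left commented out so nothing runs without Ollama installed):
# run_ollama("pull", "llama2")   # download a model
# run_ollama("list")             # show downloaded models
# run_ollama("rm", "llama2")     # remove a model

# Made-up timing values: 100 tokens generated in 2 seconds.
sample = {"eval_count": 100, "eval_duration": 2_000_000_000}
print(tokens_per_second(sample))  # prints 50.0
```

On the command line, `ollama run llama2 --verbose` prints similar timing statistics after each response.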

The Future of Ollama and Its Impact on Model Accessibility

Ollama represents a significant step forward in making large language models more accessible to a broader audience. By offering a user-friendly interface and supporting a range of models, it opens up possibilities for individuals and businesses to explore the power of AI without the complexities of cloud-based solutions. As Ollama continues to develop, including the upcoming Windows support, it is poised to become an essential tool for anyone interested in leveraging AI locally.

Whether you’re a seasoned developer or someone new to the world of AI, Ollama offers a gateway to explore and experiment with large language models on your terms. As I continue to explore its capabilities, I look forward to sharing more insights and tutorials on how to make the most of this exciting tool.

Author: Sam Witteveen