aya-expanse

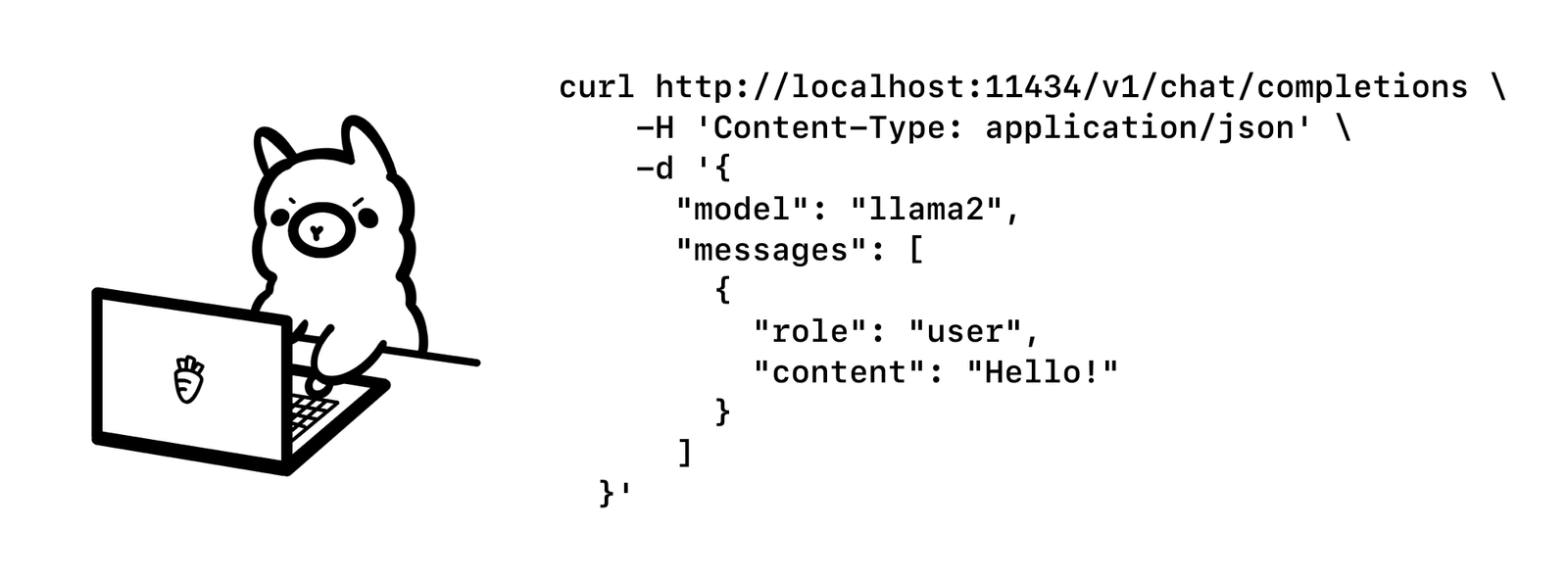

Aya Expanse is a family of multilingual models from Cohere. It combines Cohere's Command model family with a year of dedicated research in multilingual optimization, resulting in 8B and 32B parameter models that understand and generate text across 23 languages while keeping strong general performance.

Run aya-expanse with Ollama: Key …
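
As a rough sketch of what running the model through Ollama can look like from code, the snippet below posts a prompt to Ollama's local REST endpoint (`/api/generate`). It assumes an Ollama server is already running on the default port 11434 and that the model has been pulled under the name `aya-expanse`. The helper names `build_payload` and `generate` are hypothetical, chosen only for this illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "aya-expanse") -> bytes:
    """Encode a non-streaming generate request as UTF-8 JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    # ensure_ascii=False keeps non-Latin scripts readable in the request body.
    return json.dumps(body, ensure_ascii=False).encode("utf-8")


def generate(prompt: str, model: str = "aya-expanse", url: str = OLLAMA_URL) -> str:
    """Send the prompt to a running Ollama server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=build_payload(prompt, model),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        # With "stream": False the server returns a single JSON object
        # whose "response" field holds the generated text.
        return json.loads(response.read().decode("utf-8"))["response"]
```

With the server running, `generate("Translate to French: Good morning, how are you?")` returns the model's reply as a string. Passing a size-specific tag such as `model="aya-expanse:32b"` selects the larger variant, assuming that tag has been pulled locally.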