A cutting-edge 12B model featuring a 128k context length, developed by Mistral AI in partnership with NVIDIA.

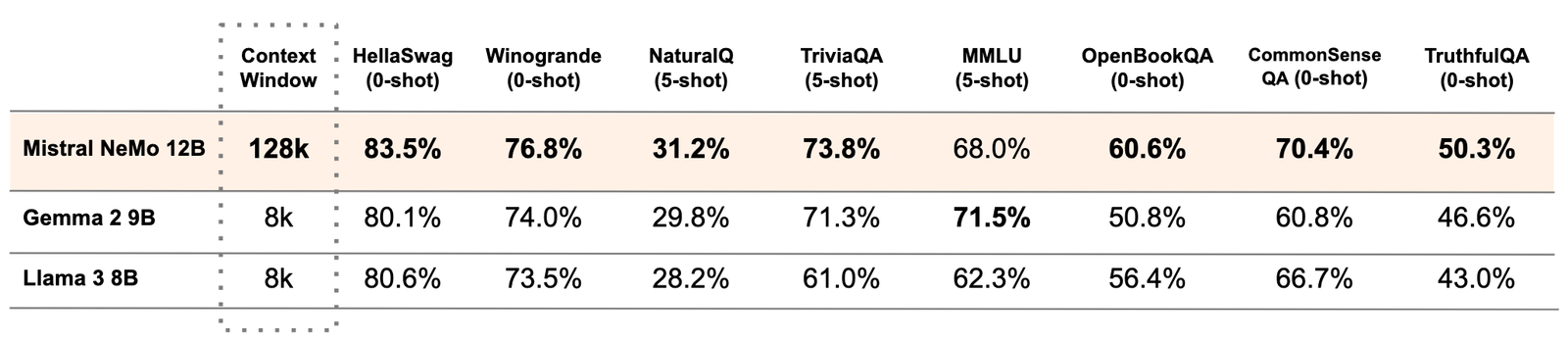

Mistral NeMo is a 12B model built in collaboration with NVIDIA. It offers a large context window of up to 128k tokens, and its reasoning, world knowledge, and coding accuracy are state-of-the-art in its size category. Because it relies on a standard architecture, Mistral NeMo is easy to use and can serve as a drop-in replacement in any system that uses Mistral 7B.

Run Mistral NeMo on Ollama:

```shell
ollama run mistral-nemo
```
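The model can also be called from a program rather than from the interactive CLI. Below is a minimal sketch that uses Ollama's local REST API from Python. It assumes a local Ollama server at its default address (`http://localhost:11434`) and that `mistral-nemo` has already been pulled. The helper names `build_generate_payload` and `generate` are hypothetical. The `/api/generate` endpoint, the `stream` flag, and the `num_ctx` option come from Ollama's API, but check the current Ollama documentation for details. Ollama allocates a much smaller context than 128k tokens by default, so the sketch lets you raise `num_ctx` explicitly when a request needs the long window.

```python
import json
import urllib.request
from typing import Optional

# Assumption: a local Ollama server on its default port, with no authentication.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_payload(prompt: str, model: str = "mistral-nemo",
                           num_ctx: Optional[int] = None) -> dict:
    """Build a JSON body for Ollama's /api/generate endpoint (hypothetical helper)."""
    # stream=False asks Ollama to return one complete JSON object instead of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    if num_ctx is not None:
        # num_ctx sets the context window (in tokens) for this request.
        # Larger values need more memory, up to the model's 128k limit.
        payload["options"] = {"num_ctx": num_ctx}
    return payload


def generate(prompt: str, model: str = "mistral-nemo",
             num_ctx: Optional[int] = None, url: str = OLLAMA_URL) -> str:
    """Send a non-streaming generation request and return the generated text."""
    body = json.dumps(build_generate_payload(prompt, model, num_ctx)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request) as response:
        # The non-streaming response carries the generated text in its "response" field.
        return json.loads(response.read())["response"]
```

For example, `generate("Summarize this contract: ...", num_ctx=32768)` sends a single request with a 32k-token window. Passing `model="mistral"`, the Ollama tag for Mistral 7B, changes only the model name and leaves the rest of the calling code untouched.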