Ollama UI is a full-featured, open-source front end for large language models (LLMs) that lets you work with open-source models running locally on your own machine. In this article, I'll walk you through its features and how to set it up, so let's dive in.

What Is Ollama UI?

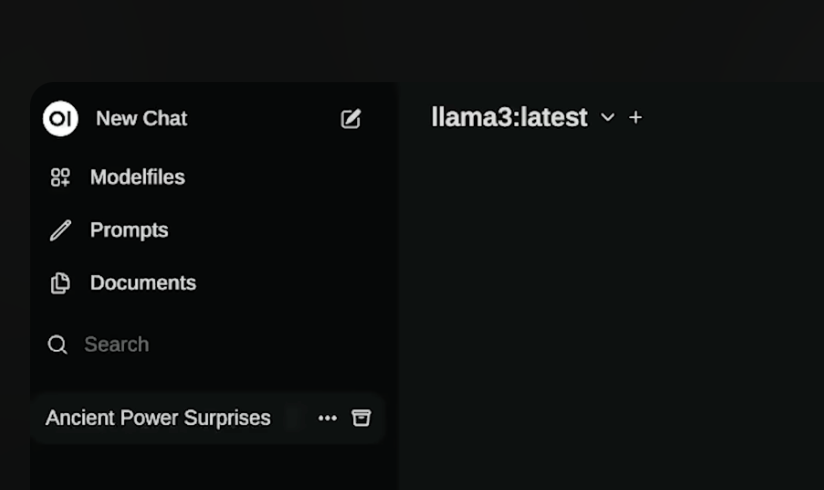

Open WebUI (formerly Ollama WebUI) is an open-source web interface that feels very similar to ChatGPT, with one key difference: it runs entirely on your own machine, which is why the browser shows a localhost:3000 address. It works with popular open models such as Llama, and I'm currently using Llama 3 with 8 billion parameters. On my machine, inference is impressively fast, which is one of its standout features.
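Inference speed depends heavily on your hardware, so it is worth measuring on your own machine. Ollama's `--verbose` flag prints timing statistics after each response, including an `eval rate` in tokens per second. The helper below is a minimal sketch: `eval_rate` is a hypothetical name, and the parsing assumes the statistics line looks like `eval rate: 42.50 tokens/s`, a format that may change between Ollama versions.

```bash
#!/usr/bin/env bash
# eval_rate MODEL PROMPT (hypothetical helper)
# Runs one prompt with `ollama run --verbose` and prints only the number
# from the "eval rate:" line (generation speed in tokens per second).
eval_rate() {
  local model=$1 prompt=$2
  # Merge stdout and stderr so the statistics are captured whichever stream
  # Ollama writes them to. The ^ anchor skips the "prompt eval rate:" line.
  ollama run --verbose "$model" "$prompt" 2>&1 | awk '/^eval rate:/ { print $3 }'
}
```

For example, `eval_rate llama3:8b "Explain RAG in two sentences."` prints a single tokens-per-second figure, which makes it easy to compare models or machines.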

The interface is user-friendly. It offers predefined prompts, lets you save prompt templates for later use, and lets you import prompts shared by the community. It also supports voice input, file uploads, and document integration.

Setting Up Models and Prompts

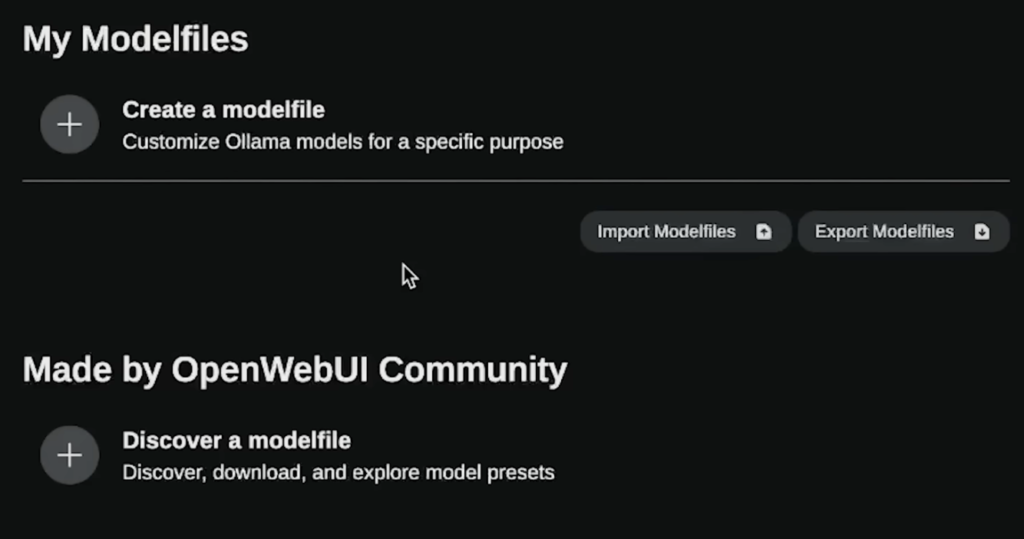

Ollama UI lets you load multiple models at the same time. It also uses model files (Modelfiles): preset configurations that define how a model behaves, including its system prompt and guardrails. You can download model files from the community or create your own.
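As a rough illustration, the sketch below writes a model file and registers it with Ollama. It assumes the model files described here follow Ollama's Modelfile format (`FROM`, `PARAMETER`, `SYSTEM`); the model name `concise-analyst`, the temperature, and the system prompt are hypothetical values chosen for the example, and the system prompt acts only as a soft guardrail.

```bash
#!/usr/bin/env bash
# Hypothetical example: a custom model built on Llama 3 8B.
MODEL_NAME="concise-analyst"

# Write the Modelfile: base model, one sampling parameter, and a system prompt.
# The quoted 'EOF' stops the shell from expanding anything inside the heredoc.
cat > Modelfile <<'EOF'
FROM llama3:8b
PARAMETER temperature 0.3
SYSTEM """You are a concise financial analyst. Answer in at most three sentences and say so when you are unsure."""
EOF

# Register the model with Ollama only if the CLI is installed.
if command -v ollama >/dev/null 2>&1; then
  ollama create "$MODEL_NAME" -f Modelfile \
    || echo "ollama create failed; is the Ollama server running?" >&2
else
  echo "ollama not found; Modelfile written but not registered" >&2
fi
```

Once `ollama create` succeeds, the new model should appear alongside your other Ollama models in the UI's model selector, and you can also try it from the terminal with `ollama run concise-analyst`.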

The prompt system is just as flexible. You can save commonly used prompts, create new ones, or import prompts made by others. The UI also suggests prompts, and you can manage all of your saved prompts from the interface.

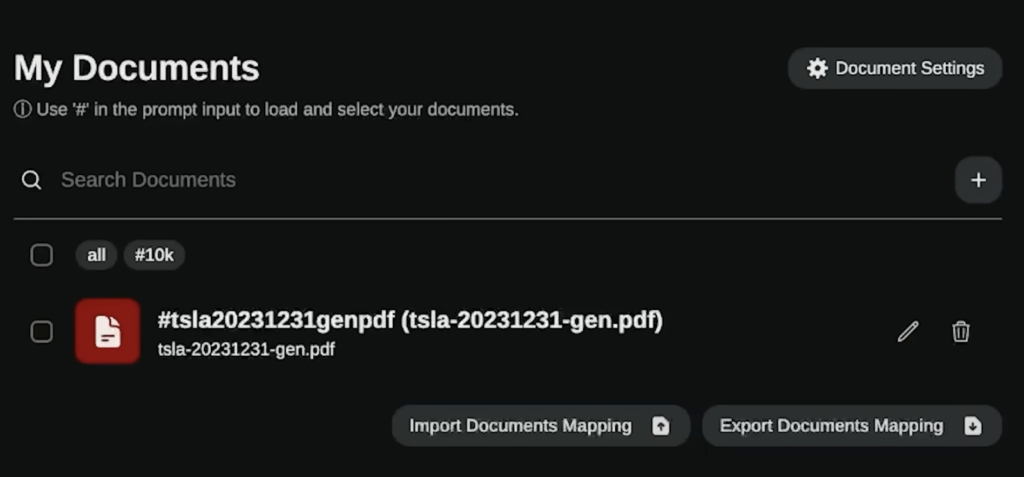

Document Management and Embeddings

Document integration is another standout feature: it lets Ollama UI work as a local retrieval-augmented generation (RAG) system. You can upload documents, such as the Tesla 10-K filing in my example, and reference them directly in your prompts by typing a pound sign (#).

It also supports different embedding models, such as Sentence Transformers models, which are downloaded and run locally. You can tune how documents are split for retrieval by adjusting the chunk size and chunk overlap, which makes Ollama UI well suited to working with long documents.
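To see what chunk size and overlap control, here is a simplified character-based splitter. It is an illustration only, not the splitter Ollama UI actually uses: each chunk is at most `size` characters, and each new chunk starts `size - overlap` characters after the previous one, so neighbouring chunks share `overlap` characters of context. The function name `chunk_text` is hypothetical.

```bash
#!/usr/bin/env bash
# chunk_text TEXT SIZE OVERLAP (hypothetical helper)
# Prints one chunk per line. Consecutive chunks share OVERLAP characters.
chunk_text() {
  local text=$1 size=$2 overlap=$3
  local step=$(( size - overlap ))
  # The window must move forward, so the overlap has to be smaller than the chunk.
  if (( step <= 0 )); then
    echo "overlap must be smaller than chunk size" >&2
    return 1
  fi
  local len=${#text} start=0
  while (( start < len )); do
    # Bash substring expansion: SIZE characters starting at offset START.
    printf '%s\n' "${text:start:size}"
    # Stop once this chunk reaches the end of the text.
    (( start + size >= len )) && break
    start=$(( start + step ))
  done
}
```

For example, `chunk_text "abcdefghij" 4 1` yields `abcd`, `defg`, and `ghij`: each chunk repeats the last character of the one before it. A larger overlap preserves more context across chunk boundaries at the cost of storing and embedding more duplicated text.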

Installation Guide

Setting up Ollama UI is simple, but it requires two tools: Docker and Ollama. If you don't have them installed yet, download and set them up first. Afterward, clone the Open WebUI repository from GitHub using the following steps:

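- Open your terminal and run:

```bash
git clone https://github.com/open-webui/open-webui
```

- Navigate into the directory:

```bash
cd open-webui
```

- Use Docker to run the system by entering the command listed in the installation instructions.

- If Ollama is installed on your computer, use this command:

```bash
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```

This maps port 3000 on your machine to port 8080 inside the container, lets the container reach Ollama on the host through `host.docker.internal`, and stores your data in a Docker volume named `open-webui` so it survives container restarts.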
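Because the container starts in the background (`-d`), the UI can take a few seconds to come up. The helpers below are a minimal sketch for checking the install from the terminal; `require_cmd` and `wait_for_url` are hypothetical names, and `wait_for_url` assumes `curl` is installed.

```bash
#!/usr/bin/env bash
# require_cmd NAME... (hypothetical helper)
# Reports every missing command and fails if any of them is not on PATH.
require_cmd() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing required command: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# wait_for_url URL [TIMEOUT_SECONDS] (hypothetical helper)
# Polls URL every 2 seconds until it answers without an HTTP error status,
# or gives up after TIMEOUT_SECONDS (default 60).
wait_for_url() {
  local url=$1 timeout=${2:-60} waited=0
  # curl -f makes HTTP 4xx/5xx responses count as failures.
  until curl -fsS -o /dev/null "$url" 2>/dev/null; do
    if (( waited >= timeout )); then
      echo "timed out waiting for $url" >&2
      return 1
    fi
    sleep 2
    waited=$(( waited + 2 ))
  done
  echo "$url is up"
}
```

After the `docker run` step, `require_cmd docker curl && wait_for_url http://localhost:3000 120` waits up to two minutes for the UI. If it times out, `docker logs --tail 20 open-webui` shows the container's most recent log lines.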

After completing these steps, open http://localhost:3000 in your browser to access the UI. You will need to sign up, but the account is created locally and doesn't require any external registration; the first account you create becomes the administrator. Once logged in, you can load your Llama models or any other model available in Ollama.
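The UI can only offer models that Ollama already has, so a model must be pulled before you can select it. The sketch below pulls a model only if it is missing. The helper names `model_installed` and `ensure_model` are hypothetical, and the check assumes `ollama list` prints a header row followed by one model per line with the full name (including its tag) in the first column.

```bash
#!/usr/bin/env bash
# model_installed NAME (hypothetical helper)
# Succeeds if NAME (e.g. llama3:8b) appears in the first column of `ollama list`.
model_installed() {
  ollama list | awk -v m="$1" 'NR > 1 && $1 == m { found = 1 } END { exit !found }'
}

# ensure_model NAME (hypothetical helper)
# Pulls NAME with Ollama only when it is not already installed.
ensure_model() {
  local name=$1
  if model_installed "$name"; then
    echo "$name already installed"
  else
    ollama pull "$name"
  fi
}
```

For example, `ensure_model llama3:8b` downloads Llama 3 8B on first use; after refreshing the page, the model should show up in the UI's model selector. Note that names are matched exactly, so `llama3` pulled without a tag is listed as `llama3:latest`.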

Check out the Open WebUI documentation at docs.openwebui.com.

If you want an all-in-one way to run large language models locally, Ollama UI is worth a look. Its feature set, including document management, prompt templates, and multiple-model support, makes it a capable tool for AI enthusiasts and developers.