SmolLM2 is a family of compact language models available in three sizes: 135M, 360M, and 1.7B parameters.

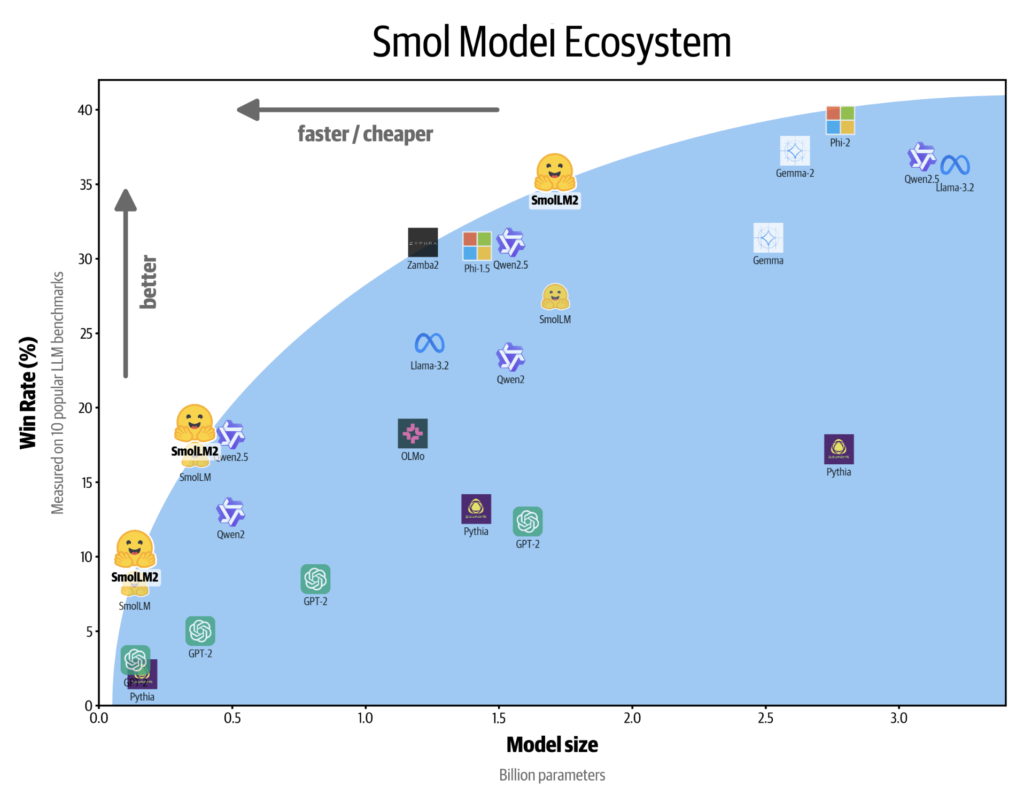

Designed to handle a broad spectrum of tasks, the SmolLM2 models remain lightweight enough to run directly on-device.
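To put "lightweight" in numbers, the weights alone take roughly (number of parameters) × (bytes per parameter). The sketch below applies that back-of-envelope rule to the three nominal sizes. The precision formats in `BYTES_PER_PARAM` are assumptions chosen for illustration, and the estimate deliberately ignores the KV cache, activations, and runtime overhead, so actual memory use on a device will be higher.

```python
# Nominal parameter counts for the three SmolLM2 sizes; real checkpoints differ slightly.
MODEL_SIZES = {"135M": 135e6, "360M": 360e6, "1.7B": 1.7e9}

# Assumed weight formats and their storage cost per parameter (weights only).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_memory_mb(num_params: float, bytes_per_param: float) -> float:
    """Approximate size of the weights alone, in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


def memory_table() -> dict:
    """Map (model size, weight format) to an approximate weight footprint in MB."""
    return {
        (name, fmt): weight_memory_mb(n, b)
        for name, n in MODEL_SIZES.items()
        for fmt, b in BYTES_PER_PARAM.items()
    }


if __name__ == "__main__":
    for (name, fmt), mb in memory_table().items():
        print(f"SmolLM2-{name} @ {fmt}: ~{mb:,.0f} MB")
```

For example, by this estimate the 1.7B model needs about 3.4 GB of weights at fp16 but roughly 850 MB at 4-bit, while the 135M model fits in about 270 MB even at fp16.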

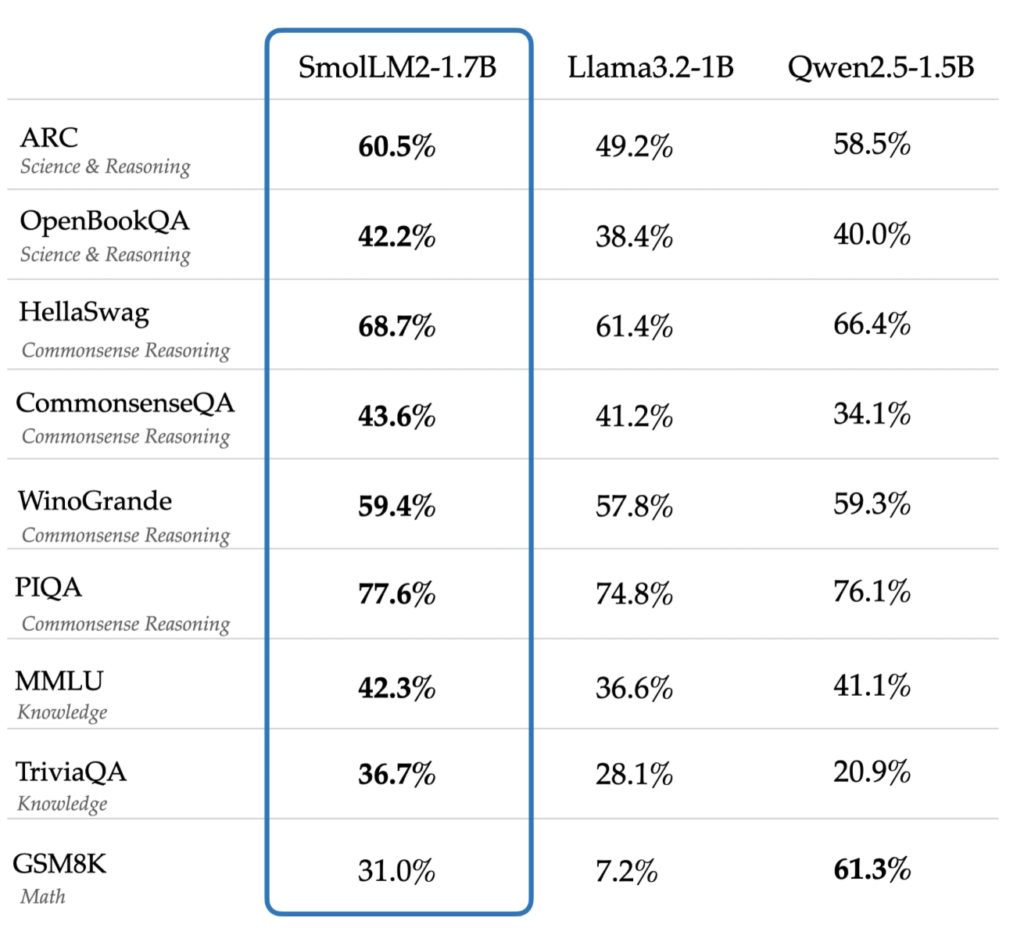

Benchmark results highlight the advances made with SmolLM2. The 1.7B instruct model scores 56.7 on IFEval, 6.13 on MT-Bench, 19.3 on MMLU-Pro, and 48.2 on GSM8K, performance that often matches or surpasses Meta's Llama 3.2 1B model. Its compact size suits environments where larger models would be impractical, making it particularly valuable for industries focused on real-time, on-device processing and low infrastructure costs.

SmolLM2 pairs strong performance with a compact design that is well suited to on-device applications. With models ranging from 135M to 1.7B parameters, it lets developers trade capability against footprint without sacrificing speed or efficiency, which makes it a good fit for edge computing. It handles text rewriting, summarization, and function calling, and shows improved mathematical reasoning, making it a cost-effective choice for on-device AI. As compact language models gain traction in privacy-conscious and latency-sensitive applications, SmolLM2 sets a new standard for on-device NLP.

Run on Ollama

```shell
ollama run smollm2
```
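Beyond the interactive CLI, a running Ollama server also exposes a local HTTP API. The sketch below assumes the server is listening on its default address `http://localhost:11434` and that the model has already been pulled under the `smollm2` tag; it sends a single non-streaming prompt to the `/api/generate` endpoint using only the Python standard library. The helper names `build_request` and `generate` are hypothetical, chosen for illustration, and are not part of any Ollama client library.

```python
import json
import urllib.request

# Default local Ollama endpoint (assumes the Ollama server is running on its default port).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "smollm2") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "smollm2", timeout: float = 120.0) -> str:
    """Send the request and return the generated text from the JSON 'response' field."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]


if __name__ == "__main__":
    # Hypothetical summarization prompt; requires a reachable Ollama server.
    print(generate("Summarize in one sentence: SmolLM2 is a family of compact language models."))
```

Setting `"stream": False` makes Ollama return the whole completion as one JSON object; without it, the endpoint streams newline-delimited JSON chunks, which this sketch does not parse.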