Ollama is an application built on llama.cpp that lets you run LLMs directly on your own computer. It works with any GGUF quantized model published by the community on Hugging Face, such as those from bartowski, MaziyarPanahi, and many others. There is no need to create a new Modelfile: you can run any of the 45,000+ public GGUF checkpoints on the Hugging Face Hub with a single command. Customization options, such as the quantization type and the system prompt, are also available.

Getting started is straightforward:

ollama run hf.co/{username}/{repository}

You can use either hf.co or huggingface.co as the domain. Here are some models you might want to try:

ollama run hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF

ollama run hf.co/mlabonne/Meta-Llama-3.1-8B-Instruct-abliterated-GGUF

ollama run hf.co/arcee-ai/SuperNova-Medius-GGUF

ollama run hf.co/bartowski/Humanish-LLama3-8B-Instruct-GGUF

Custom Quantization

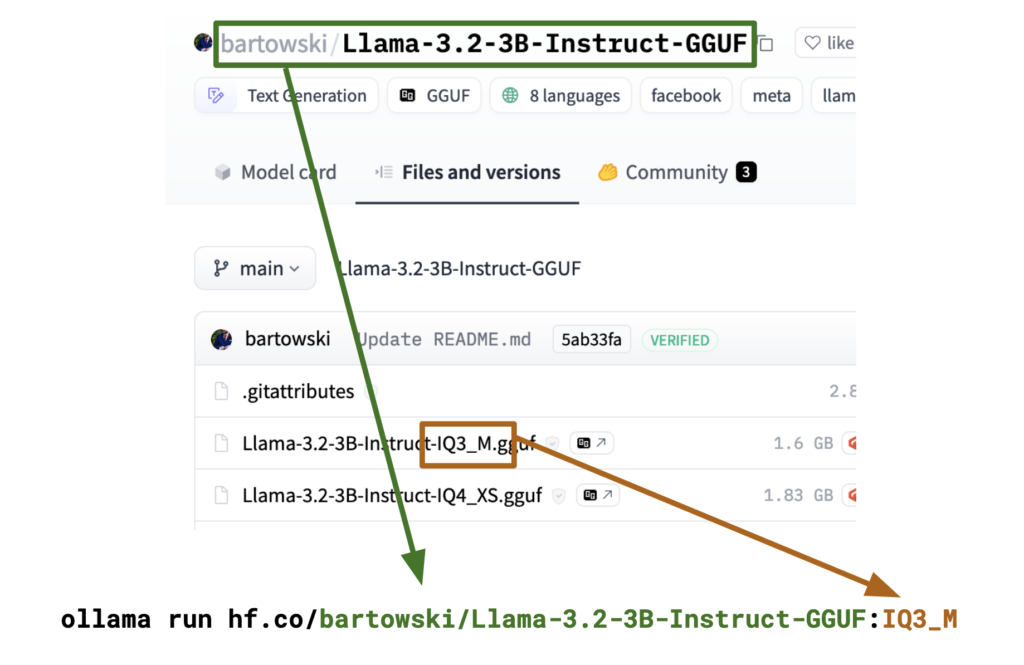

By default, the Q4_K_M quantization scheme is used when available in the model repository. If not, a suitable alternative is selected. To specify a different quantization scheme, add a tag:

ollama run hf.co/{username}/{repository}:{quantization}

For example:

ollama run hf.co/bartowski/Llama-3.2-3B-Instruct-GGUF:IQ3_M

ollama run hf.co/bartowski/Llama-3.2-3B-Instruct-GGUF:Q8_0

Quantization names are case-insensitive, so this will also work:

ollama run hf.co/bartowski/Llama-3.2-3B-Instruct-GGUF:iq3_m

You can also use the full filename directly as a tag:

ollama run hf.co/bartowski/Llama-3.2-3B-Instruct-GGUF:Llama-3.2-3B-Instruct-IQ3_M.gguf

Custom Chat Templates and Parameters

By default, Ollama selects a template from a set of commonly used options, based on metadata stored in the GGUF file. If your GGUF file lacks a built-in template, or you prefer to customize it, create a template file in the repository. It must be written as a Go template, not a Jinja template. Here’s an example:

{{ if .System }}<|system|>
{{ .System }}<|end|>
{{ end }}{{ if .Prompt }}<|user|>
{{ .Prompt }}<|end|>
{{ end }}<|assistant|>
{{ .Response }}<|end|>

For more details on the Go template format, refer to the documentation.
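To make the example template more concrete, here is a rough Python emulation of the text it would produce for a given system prompt, user prompt, and response. This is only a sketch of the substitution logic, assuming the template lines are contiguous (no blank lines between them). Ollama itself evaluates the template with Go's template engine, and the function name `render_chat` is hypothetical.

```python
def render_chat(system: str, prompt: str, response: str = "") -> str:
    """Mimic the example Go template with plain string formatting."""
    parts = []
    if system:  # corresponds to {{ if .System }} ... {{ end }}
        parts.append(f"<|system|>\n{system}<|end|>\n")
    if prompt:  # corresponds to {{ if .Prompt }} ... {{ end }}
        parts.append(f"<|user|>\n{prompt}<|end|>\n")
    # The assistant turn is always emitted; the response is empty
    # until the model has generated something.
    parts.append(f"<|assistant|>\n{response}<|end|>")
    return "".join(parts)


if __name__ == "__main__":
    print(render_chat("You are a concise assistant.", "What is GGUF?"))
```

Note that an empty system prompt drops the whole `<|system|>` block, just as the `{{ if .System }}` guard does in the Go template.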

You can also configure a system prompt by creating a system file in the repository, or adjust sampling parameters using a params file in JSON format. For the full list of available parameters, consult the documentation.
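As an illustration of the system and params files, the following Python sketch writes both into a local checkout of a model repository. The file names `system` and `params` follow the wording above and should be checked against the documentation. The helper name `write_repo_files` and the example values are hypothetical. `temperature` and `top_p` are standard Ollama sampling parameters, used here only as examples.

```python
import json
from pathlib import Path


def write_repo_files(repo_dir: str, system_prompt: str, params: dict) -> None:
    """Write a plain-text `system` file and a JSON `params` file (assumed names)."""
    root = Path(repo_dir)
    root.mkdir(parents=True, exist_ok=True)
    # The system prompt is stored as plain text.
    (root / "system").write_text(system_prompt + "\n", encoding="utf-8")
    # Sampling parameters are stored as a JSON object.
    (root / "params").write_text(json.dumps(params, indent=2) + "\n", encoding="utf-8")
```

For example, `write_repo_files("my-gguf-repo", "You are a concise assistant.", {"temperature": 0.6, "top_p": 0.9})` would create both files in a directory named `my-gguf-repo`, ready to be committed and pushed to the repository.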